News

20 Dec 2025

Season's Greetings from eyesmart.com.au

19 Dec 2025

Safilo Acquires 25 Percent Stake in Inspecs Group

18 Dec 2025

Games and Cultural Sectors Get Vision-Impaired Accessibility Standards for XR

17 Dec 2025

New Zealand Study Finds Hearing and Vision Tests Drop in Aged Care

16 Dec 2025

Safilo Withdraws From Race to Acquire UK Eyewear Group Inspecs

15 Dec 2025

EssilorLuxottica Expands Med-Tech Footprint and Smart Eyewear Innovation

12 Dec 2025

ZEISS Meditec CEO Steps Down Following Compliance Breach as Company Reports Solid Annual Growth

11 Dec 2025

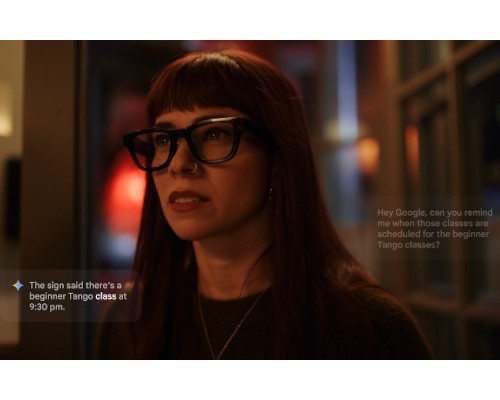

Google Returns to Smart Eyewear Market with AI-Powered Glasses in 2026

10 Dec 2025

ACO Opens Registrations for 2026 Certificate Courses, Introduces Advanced Ocular Therapeutics Program

09 Dec 2025

Lindberg Opens First Australian Boutique in Sydney CBD